DBS-C01 dumps update | AWS Certified Database – Specialty Exam Materials

The AWS Certified Database – Specialty exam materials come in two study formats: a PDF file and a VCE exam engine. Go to the leads4pass DBS-C01 dumps page at https://www.leads4pass.com/aws-certified-database-specialty.html, where you will find the file download button. Choose whichever format suits your study environment to help you pass the AWS Certified Database – Specialty exam quickly and smoothly.

Candidates should know that the AWS Certified Database – Specialty credential helps organizations identify and develop talent with the key skills to implement cloud initiatives, and helps certificate holders advance their careers.

Use the leads4pass DBS-C01 dumps to learn easily, progress quickly, and earn the AWS Certified Database – Specialty certification.

AWS Certified Database – Specialty Exam Information:

Amazon DBS-C01 is the exam code for the AWS Certified Database – Specialty exam. All candidates should know the following details:

Vendor: Amazon

Exam Code: DBS-C01

Exam Name: AWS Certified Database – Specialty (DBS-C01)

Certification: AWS Certified Specialty

Length: 180 minutes to complete the exam

Price: 300 USD

Format: 65 questions; either multiple choice or multiple response

Languages: English, Japanese, Korean, and Simplified Chinese

Delivery method: Pearson VUE and PSI; testing center or online proctored exam

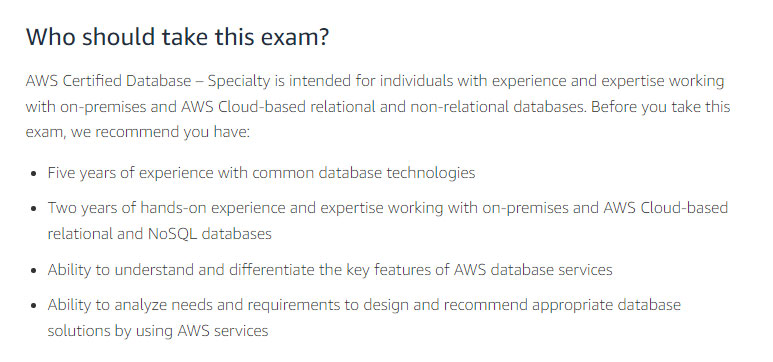

AWS Certified Database – Specialty DBS-C01 Exam Requirements:

You should understand that the AWS Specialty level is not for everyone. You must meet all of the required conditions. The AWSexamdumps blog contains learning materials for every AWS level. If you are preparing to take the Specialty level exam, keep studying.

Next, read a portion of the free DBS-C01 Dumps exam questions and answers online:

QUESTION 1:

A company is loading sensitive data into an Amazon Aurora MySQL database. To meet compliance requirements, the company needs to enable audit logging on the Aurora MySQL DB cluster to audit database activity. This logging will include events such as connections, disconnections, queries, and tables queried. The company also needs to publish the DB logs to Amazon CloudWatch to perform real-time data analysis.

Which solution meets these requirements?

A. Modify the default option group parameters to enable Advanced Auditing. Restart the database for the changes to take effect.

B. Create a custom DB cluster parameter group. Modify the parameters for Advanced Auditing. Modify the cluster to associate the new custom DB parameter group with the Aurora MySQL DB cluster.

C. Take a snapshot of the database. Create a new DB instance, and enable custom auditing and logging to CloudWatch. Deactivate the DB instance that has no logging.

D. Enable AWS CloudTrail for the DB instance. Create a filter that provides only connections, disconnections, queries, and tables queried.

Correct Answer: B
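As a rough sketch of the workflow described in option B, the Python below only builds the request parameters that could be passed to boto3's `modify_db_cluster_parameter_group` and `modify_db_cluster` calls; it does not contact AWS. The group name, the cluster name, and the chosen `server_audit_events` value are illustrative assumptions.

```python
# Sketch only: these dicts could be passed as keyword arguments to the
# boto3 RDS client. All names used in the usage lines are hypothetical.

def audit_parameter_request(group_name):
    """Parameters that switch on Advanced Auditing in a custom
    DB cluster parameter group."""
    return {
        "DBClusterParameterGroupName": group_name,
        "Parameters": [
            {"ParameterName": "server_audit_logging",
             "ParameterValue": "1",
             "ApplyMethod": "immediate"},
            # Assumed event list covering connections, queries, and tables.
            {"ParameterName": "server_audit_events",
             "ParameterValue": "CONNECT,QUERY,TABLE",
             "ApplyMethod": "immediate"},
        ],
    }


def attach_and_export_request(cluster_id, group_name):
    """Associate the custom group with the cluster and publish the
    audit log to CloudWatch Logs."""
    return {
        "DBClusterIdentifier": cluster_id,
        "DBClusterParameterGroupName": group_name,
        "CloudwatchLogsExportConfiguration": {"EnableLogTypes": ["audit"]},
    }


params = audit_parameter_request("custom-audit-group")
attach = attach_and_export_request("sensitive-cluster", "custom-audit-group")
```

With boto3 installed and credentials configured, these would be used as `rds.modify_db_cluster_parameter_group(**params)` followed by `rds.modify_db_cluster(**attach)`.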

QUESTION 2:

A company is using Amazon RDS for PostgreSQL. The Security team wants all database connection requests to be logged and retained for 180 days. The RDS for PostgreSQL DB instance is currently using the default parameter group.

A Database Specialist has identified that setting the log_connections parameter to 1 will enable connections logging.

Which combination of steps should the Database Specialist take to meet the logging and retention requirements? (Choose two.)

A. Update the log_connections parameter in the default parameter group

B. Create a custom parameter group, update the log_connections parameter, and associate the parameter group with the DB instance

C. Enable publishing of database engine logs to Amazon CloudWatch Logs and set the event expiration to 180 days

D. Enable publishing of database engine logs to an Amazon S3 bucket and set the lifecycle policy to 180 days

E. Connect to the RDS PostgreSQL host and update the log_connections parameter in the postgresql.conf file

Correct Answer: BC

Reference: https://aws.amazon.com/blogs/database/working-with-rds-and-aurora-postgresql-logs-part-1/
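The custom parameter group and CloudWatch Logs retention steps named in options B and C can be sketched as request parameters for boto3's `modify_db_parameter_group` (RDS client) and `put_retention_policy` (CloudWatch Logs client). This is a minimal sketch that builds the dicts without calling AWS; the group name, the instance identifier, the `ApplyMethod` value, and the log group naming pattern are assumptions.

```python
# Sketch only: builds keyword arguments for boto3 calls.
# Names passed in the usage lines are hypothetical.

def log_connections_request(group_name):
    """Set log_connections to 1 in a custom DB parameter group."""
    return {
        "DBParameterGroupName": group_name,
        "Parameters": [
            {"ParameterName": "log_connections",
             "ParameterValue": "1",
             "ApplyMethod": "immediate"},  # assumed apply method
        ],
    }


def retention_request(instance_id, days=180):
    """Retention policy for the log group that receives the
    published PostgreSQL logs (assumed naming pattern)."""
    return {
        "logGroupName": f"/aws/rds/instance/{instance_id}/postgresql",
        "retentionInDays": days,
    }


param_args = log_connections_request("custom-pg-group")
retention_args = retention_request("my-postgres-instance")
```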

QUESTION 3:

A software company uses an Amazon RDS for MySQL Multi-AZ DB instance as a data store for its critical applications.

During an application upgrade process, a database specialist runs a custom SQL script that accidentally removes some of the default permissions of the master user.

What is the MOST operationally efficient way to restore the default permissions of the master user?

A. Modify the DB instance and set a new master user password.

B. Use AWS Secrets Manager to modify the master user password and restart the DB instance.

C. Create a new master user for the DB instance.

D. Review the IAM user that owns the DB instance, and add the missing permissions.

Correct Answer: A

QUESTION 4:

A company has 4 on-premises Oracle Real Application Clusters (RAC) databases. The company wants to migrate the databases to AWS and reduce licensing costs. The company's application team wants to store JSON payloads that expire after 28 hours. The company has development capacity if code changes are required.

Which solution meets these requirements?

A. Use Amazon DynamoDB and leverage the Time to Live (TTL) feature to automatically expire the data.

B. Use Amazon RDS for Oracle with Multi-AZ. Create an AWS Lambda function to purge the expired data. Schedule the Lambda function to run daily using Amazon EventBridge.

C. Use Amazon DocumentDB with a read replica in a different Availability Zone. Use DocumentDB change streams to expire the data.

D. Use Amazon Aurora PostgreSQL with Multi-AZ and leverage the Time to Live (TTL) feature to automatically expire the data.

Correct Answer: A
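DynamoDB TTL works from a numeric attribute that holds an expiry time in epoch seconds. A minimal sketch of writing an item that expires after 28 hours follows; the attribute names `payload_id`, `payload`, and `expires_at` are hypothetical, and the dict uses the low-level DynamoDB attribute-value format.

```python
import time

TTL_SECONDS = 28 * 60 * 60  # 28 hours, as stated in the question


def build_item(payload_id, payload_json, now=None):
    """Return an item whose expires_at attribute is 28 hours after now.

    `now` is epoch seconds; it defaults to the current time.
    """
    now = int(time.time()) if now is None else int(now)
    return {
        "payload_id": {"S": payload_id},
        "payload": {"S": payload_json},
        "expires_at": {"N": str(now + TTL_SECONDS)},
    }


item = build_item("order-1", '{"status": "new"}')
```

The table's TTL setting would need to name `expires_at` as the TTL attribute for the expiry to take effect.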

QUESTION 5:

An application reads and writes data to an Amazon RDS for MySQL DB instance. A new reporting dashboard needs read-only access to the database. When the application and reports are both under heavy load, the database experiences performance degradation. A database specialist needs to improve the database performance.

What should the database specialist do to meet these requirements?

A. Create a read replica of the DB instance. Configure the reports to connect to the replication instance endpoint.

B. Create a read replica of the DB instance. Configure the application and reports to connect to the cluster endpoint.

C. Enable Multi-AZ deployment. Configure the reports to connect to the standby replica.

D. Enable Multi-AZ deployment. Configure the application and reports to connect to the cluster endpoint.

Correct Answer: A

QUESTION 6:

A company is running its critical production workload on a 500 GB Amazon Aurora MySQL DB cluster. A database engineer must move the workload to a new Amazon Aurora Serverless MySQL DB cluster without data loss.

Which solution will accomplish the move with the LEAST downtime and the LEAST application impact?

A. Modify the existing DB cluster and update the Aurora configuration to “Serverless.”

B. Create a snapshot of the existing DB cluster and restore it to a new Aurora Serverless DB cluster.

C. Create an Aurora Serverless replica from the existing DB cluster and promote it to primary when the replica lag is minimal.

D. Replicate the data between the existing DB cluster and a new Aurora Serverless DB cluster by using AWS Database Migration Service (AWS DMS) with change data capture (CDC) enabled.

Correct Answer: D

Reference: https://docs.aws.amazon.com/AmazonRDS/latest/AuroraUserGuide/Aurora.Replication.html

QUESTION 7:

A database specialist is working on an Amazon RDS for PostgreSQL DB instance that is experiencing application performance issues due to the addition of new workloads. The database has 5 TB of storage space with Provisioned IOPS.

Amazon CloudWatch metrics show that the average disk queue depth is greater than 200 and that the disk I/O response time is significantly higher than usual.

What should the database specialist do to improve the performance of the application immediately?

A. Increase the Provisioned IOPS rate on the storage.

B. Increase the available storage space.

C. Use General Purpose SSD (gp2) storage with burst credits.

D. Create a read replica to offload Read IOPS from the DB instance.

Correct Answer: A

QUESTION 8:

A retail company manages a web application that stores data in an Amazon DynamoDB table. The company is undergoing account consolidation efforts. A database engineer needs to migrate the DynamoDB table from the current AWS account to a new AWS account.

Which strategy meets these requirements with the LEAST amount of administrative work?

A. Use AWS Glue to crawl the data in the DynamoDB table. Create a job using an available blueprint to export the data to Amazon S3. Import the data from the S3 file to a DynamoDB table in the new account.

B. Create an AWS Lambda function to scan the items of the DynamoDB table in the current account and write them to a file in Amazon S3. Create another Lambda function to read the S3 file and restore the items of a DynamoDB table in the new account.

C. Use AWS Data Pipeline in the current account to export the data from the DynamoDB table to a file in Amazon S3. Use Data Pipeline to import the data from the S3 file to a DynamoDB table in the new account.

D. Configure Amazon DynamoDB Streams for the DynamoDB table in the current account. Create an AWS Lambda function to read from the stream and write to a file in Amazon S3. Create another Lambda function to read the S3 file and restore the items to a DynamoDB table in the new account.

Correct Answer: C

QUESTION 9:

A company wants to build a new invoicing service for its cloud-native application on AWS. The company has a small development team and wants to focus on service feature development and minimize operations and maintenance as much as possible. The company expects the service to handle billions of requests and millions of new records every day. The service feature requirements, including data access patterns, are well-defined. The service has an availability target of 99.99% with a milliseconds latency requirement. The database for the service will be the system of record for invoicing data.

Which database solution meets these requirements at the LOWEST cost?

A. Amazon Neptune

B. Amazon Aurora PostgreSQL Serverless

C. Amazon RDS for PostgreSQL

D. Amazon DynamoDB

Correct Answer: D

QUESTION 10:

A Database Specialist is creating a new Amazon Neptune DB cluster and is attempting to load data from Amazon S3 into the Neptune DB cluster using the Neptune bulk loader API. The Database Specialist receives the following error:

“Unable to connect to s3 endpoint. Provided source = s3://mybucket/graphdata/ and region = us-east-1. Please verify your S3 configuration.”

Which combination of actions should the Database Specialist take to troubleshoot the problem? (Choose two.)

A. Check that Amazon S3 has an IAM role granting read access to Neptune

B. Check that an Amazon S3 VPC endpoint exists

C. Check that a Neptune VPC endpoint exists

D. Check that Amazon EC2 has an IAM role granting read access to Amazon S3

E. Check that Neptune has an IAM role granting read access to Amazon S3

Correct Answer: BE

Reference: https://aws.amazon.com/premiumsupport/knowledge-center/s3-could-not-connect-endpoint-url/

QUESTION 11:

A company has applications running on Amazon EC2 instances in a private subnet with no internet connectivity. The company deployed a new application that uses Amazon DynamoDB, but the application cannot connect to the DynamoDB tables. A developer already checked that all permissions are set correctly.

What should a database specialist do to resolve this issue while minimizing access to external resources?

A. Add a route to an internet gateway in the subnet's route table.

B. Add a route to a NAT gateway in the subnet's route table.

C. Assign a new security group to the EC2 instances with an outbound rule to ports 80 and 443.

D. Create a VPC endpoint for DynamoDB and add a route to the endpoint in the subnet's route table.

Correct Answer: D
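The VPC endpoint approach in option D can be sketched as the request parameters for boto3's EC2 `create_vpc_endpoint` call. The function below only builds the dict; the VPC ID, Region, and route table ID in the usage line are hypothetical.

```python
# Sketch only: builds keyword arguments for ec2.create_vpc_endpoint.

def dynamodb_gateway_endpoint_request(vpc_id, region, route_table_ids):
    """Gateway endpoint for DynamoDB, associated with the private
    subnet's route tables so traffic stays off the internet."""
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.dynamodb",
        "RouteTableIds": list(route_table_ids),
    }


endpoint_args = dynamodb_gateway_endpoint_request(
    "vpc-0123456789abcdef0", "us-east-1", ["rtb-0123456789abcdef0"]
)
```

Associating the route tables with a gateway endpoint adds the route to DynamoDB, so no internet gateway or NAT gateway is involved.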

QUESTION 12:

A company released a mobile game that quickly grew to 10 million daily active users in North America. The game's backend is hosted on AWS and makes extensive use of an Amazon DynamoDB table that is configured with a TTL attribute.

When an item is added or updated, its TTL is set to the current epoch time plus 600 seconds. The game logic relies on old data being purged so that it can calculate rewards points accurately. Occasionally, items are read from the table that are several hours past their TTL expiry.

How should a database specialist fix this issue?

A. Use a client library that supports the TTL functionality for DynamoDB.

B. Include a query filter expression to ignore items with an expired TTL.

C. Set the ConsistentRead parameter to true when querying the table.

D. Create a local secondary index on the TTL attribute.

Correct Answer: B
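DynamoDB deletes expired items in the background, so items past their TTL can still be returned by reads for some time. The filter expression approach in option B might look like the sketch below, which builds parameters for a low-level boto3 `query` call without contacting AWS. The table name and the attribute names `player_id` and `ttl` are assumptions.

```python
import time

TTL_SECONDS = 600  # from the question: current epoch time plus 600 seconds


def ttl_for(now):
    """TTL value to store when an item is added or updated."""
    return int(now) + TTL_SECONDS


def query_request(table, player_id, now=None):
    """Query parameters that skip items whose TTL has already passed."""
    now = int(time.time()) if now is None else int(now)
    return {
        "TableName": table,
        "KeyConditionExpression": "#pk = :pk",
        "FilterExpression": "#ttl > :now",
        # Name placeholders avoid clashes with DynamoDB reserved words.
        "ExpressionAttributeNames": {"#pk": "player_id", "#ttl": "ttl"},
        "ExpressionAttributeValues": {
            ":pk": {"S": player_id},
            ":now": {"N": str(now)},
        },
    }


request = query_request("game-state", "player-42")
```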

QUESTION 13:

A company uses Amazon DynamoDB as the data store for its eCommerce website. The website receives little to no traffic at night, and the majority of the traffic occurs during the day. The traffic growth during peak hours is gradual and predictable on a daily basis, but it can be orders of magnitude higher than during off-peak hours.

The company initially provisioned capacity based on its average volume during the day without accounting for the variability in traffic patterns. However, the website is experiencing a significant amount of throttling during peak hours.

The company wants to reduce the amount of throttling while minimizing costs.

What should a database specialist do to meet these requirements?

A. Use reserved capacity. Set it to the capacity levels required for peak daytime throughput.

B. Use provisioned capacity. Set it to the capacity levels required for peak daytime throughput.

C. Use provisioned capacity. Create an AWS Application Auto Scaling policy to update capacity based on consumption.

D. Use on-demand capacity.

Correct Answer: C
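The auto scaling setup in option C can be sketched as the parameters for Application Auto Scaling's `register_scalable_target` and `put_scaling_policy` calls in boto3. The code only builds the dicts; the table name, capacity bounds, policy name, and 70% target utilization are illustrative assumptions.

```python
# Sketch only: builds keyword arguments for the boto3
# "application-autoscaling" client. All values are hypothetical.

def scalable_target_request(table, min_units, max_units):
    """Register the table's write capacity as a scalable target."""
    return {
        "ServiceNamespace": "dynamodb",
        "ResourceId": f"table/{table}",
        "ScalableDimension": "dynamodb:table:WriteCapacityUnits",
        "MinCapacity": min_units,
        "MaxCapacity": max_units,
    }


def target_tracking_policy_request(table, target_utilization=70.0):
    """Target tracking policy that follows consumed write capacity."""
    return {
        "PolicyName": f"{table}-write-scaling",
        "ServiceNamespace": "dynamodb",
        "ResourceId": f"table/{table}",
        "ScalableDimension": "dynamodb:table:WriteCapacityUnits",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_utilization,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "DynamoDBWriteCapacityUtilization"
            },
        },
    }


target_args = scalable_target_request("orders", 5, 5000)
policy_args = target_tracking_policy_request("orders")
```

A matching pair of requests with `ReadCapacityUnits` and `DynamoDBReadCapacityUtilization` would cover read capacity.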

……

Free DBS-C01 Dumps Exam Questions and Answers Online Download: https://drive.google.com/file/d/1MaYsOoPxyLh_fVjmYhRaKnlN2G1tYgJi/view?usp=sharing

View 234 exam questions and answers.