This is the latest update for August 2025!

Currently, SAA-C03 dumps contain 1434 exam questions, distributed in PDF and VCE formats. Both include complete exam questions and answers. Both formats are convenient for learning.

If you’re just getting started, don’t miss the article I wrote last time, “AWS SAA-C03 basic knowledge: certification solution.” Because the article is long, I split it into three parts. It walks through a complete solution for the basic knowledge, so take the time to check it out if you’re interested.

Today’s update features the latest exam practice questions. You can visit https://www.leads4pass.com/saa-c03.html to download the complete exam practice materials. You’re also welcome to practice the test online first.

Are you ready? The free questions are below:

Latest SAA-C03 dumps exam practice questions

| Number of exam questions | Compare |
| --- | --- |
| 15 (Free) | 2023 SAA-C03 Exam Questions |

Question 1:

A company has created a multi-tier application for its ecommerce website. The website uses an Application Load Balancer that resides in the public subnets, a web tier in the public subnets, and a MySQL cluster hosted on Amazon EC2 instances in the private subnets.

The MySQL database needs to retrieve product catalog and pricing information that is hosted on the internet by a third-party provider. A solutions architect must devise a strategy that maximizes security without increasing operational overhead.

What should the solutions architect do to meet these requirements?

A. Deploy a NAT instance in the VPC. Route all the internet-based traffic through the NAT instance.

B. Deploy a NAT gateway in the public subnets. Modify the private subnet route table to direct all internet-bound traffic to the NAT gateway.

C. Configure an internet gateway and attach it to the VPC. Modify the private subnet route table to direct internet-bound traffic to the internet gateway.

D. Configure a virtual private gateway and attach it to the VPC. Modify the private subnet route table to direct internet-bound traffic to the virtual private gateway.

Correct Answer: B

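A NAT gateway is a managed service that lets instances in the private subnets initiate outbound connections to the internet while blocking unsolicited inbound connections. It therefore improves security without the operational overhead of running and patching a NAT instance.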
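To make option B concrete, here is a minimal Python sketch of how a private subnet's route table could resolve a destination. The route table contents, the VPC CIDR `10.0.0.0/16`, and the target ID `nat-0example` are hypothetical values chosen for illustration; none of them come from the question.

```python
import ipaddress

# Hypothetical route table for the private subnet (illustrative values only).
PRIVATE_ROUTE_TABLE = [
    ("10.0.0.0/16", "local"),       # traffic inside the VPC stays local
    ("0.0.0.0/0", "nat-0example"),  # everything else goes to the NAT gateway
]

def resolve_target(destination_ip, routes=PRIVATE_ROUTE_TABLE):
    """Return the target of the most specific route that matches the destination."""
    address = ipaddress.ip_address(destination_ip)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in routes
        if address in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None
    # The longest matching prefix wins, as in a VPC route table.
    return max(matches, key=lambda match: match[0].prefixlen)[1]

print(resolve_target("10.0.2.15"))     # local
print(resolve_target("203.0.113.10"))  # nat-0example
```

The database instances keep their private addresses, and only internet-bound traffic leaves through the NAT gateway.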

Question 2:

A company recently performed a lift and shift migration of its on-premises Oracle database workload to run on an Amazon EC2 memory optimized Linux instance. The EC2 Linux instance uses a 1 TB Provisioned IOPS SSD (io1) EBS volume with 64,000 IOPS.

The database storage performance after the migration is slower than the performance of the on-premises database. Which solution will improve storage performance?

A. Add more Provisioned IOPS SSD (io1) EBS volumes. Use OS commands to create a Logical Volume Management (LVM) stripe.

B. Increase the Provisioned IOPS SSD (io1) EBS volume to more than 64,000 IOPS.

C. Increase the size of the Provisioned IOPS SSD (io1) EBS volume to 2 TB.

D. Change the EC2 Linux instance to a storage optimized instance type. Do not change the Provisioned IOPS SSD (io1) EBS volume.

Correct Answer: A
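The reasoning behind answer A can be sketched with simple arithmetic. A single io1 volume tops out at 64,000 IOPS, so option B is not possible, while striping several volumes adds their IOPS together. The per-instance ceiling passed in below (`instance_iops_limit`) is a hypothetical figure; the real limit depends on the instance type.

```python
IO1_MAX_IOPS_PER_VOLUME = 64_000  # per-volume ceiling for io1

def striped_iops(volume_count, iops_per_volume, instance_iops_limit):
    """Estimate the aggregate IOPS of an LVM stripe across io1 volumes.

    instance_iops_limit is a hypothetical per-instance EBS ceiling; look up
    the real value for the chosen instance type.
    """
    if iops_per_volume > IO1_MAX_IOPS_PER_VOLUME:
        raise ValueError("an io1 volume cannot exceed 64,000 IOPS")
    return min(volume_count * iops_per_volume, instance_iops_limit)

# One volume versus a stripe of three, with an assumed instance limit of 160,000.
print(striped_iops(1, 64_000, 160_000))  # 64000
print(striped_iops(3, 64_000, 160_000))  # 160000
```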

Question 3:

A company is preparing a new data platform that will ingest real-time streaming data from multiple sources. The company needs to transform the data before writing the data to Amazon S3. The company needs the ability to use SQL to query the transformed data.

Which solutions will meet these requirements? (Choose two.)

A. Use Amazon Kinesis Data Streams to stream the data. Use Amazon Kinesis Data Analytics to transform the data. Use Amazon Kinesis Data Firehose to write the data to Amazon S3. Use Amazon Athena to query the transformed data from Amazon S3.

B. Use Amazon Managed Streaming for Apache Kafka (Amazon MSK) to stream the data. Use AWS Glue to transform the data and to write the data to Amazon S3. Use Amazon Athena to query the transformed data from Amazon S3.

C. Use AWS Database Migration Service (AWS DMS) to ingest the data. Use Amazon EMR to transform the data and to write the data to Amazon S3. Use Amazon Athena to query the transformed data from Amazon S3.

D. Use Amazon Managed Streaming for Apache Kafka (Amazon MSK) to stream the data. Use Amazon Kinesis Data Analytics to transform the data and to write the data to Amazon S3. Use the Amazon RDS query editor to query the transformed data from Amazon S3.

E. Use Amazon Kinesis Data Streams to stream the data. Use AWS Glue to transform the data. Use Amazon Kinesis Data Firehose to write the data to Amazon S3. Use the Amazon RDS query editor to query the transformed data from Amazon S3.

Correct Answer: AB

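AWS DMS migrates data from databases and can deliver it to streaming services, but it does not natively ingest streaming data, which rules out C. Options D and E rely on the Amazon RDS query editor, which cannot query data in Amazon S3. Hence A and B make sense. AWS Glue streaming ETL jobs can also consume data from Amazon MSK: https://docs.aws.amazon.com/glue/latest/dg/add-job-streaming.html.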

Question 4:

A company's website uses an Amazon EC2 instance store for its catalog of items. The company wants to make sure that the catalog is highly available and that the catalog is stored in a durable location. What should a solutions architect do to meet these requirements?

A. Move the catalog to Amazon ElastiCache for Redis.

B. Deploy a larger EC2 instance with a larger instance store.

C. Move the catalog from the instance store to Amazon S3 Glacier Deep Archive.

D. Move the catalog to an Amazon Elastic File System (Amazon EFS) file system.

Correct Answer: D

Question 5:

A company uses Amazon API Gateway to run a private gateway with two REST APIs in the same VPC. The BuyStock RESTful web service calls the CheckFunds RESTful web service to ensure that enough funds are available before a stock can be purchased.

The company has noticed in the VPC flow logs that the BuyStock RESTful web service calls the CheckFunds RESTful web service over the internet instead of through the VPC. A solutions architect must implement a solution so that the APIs communicate through the VPC.

Which solution will meet these requirements with the FEWEST changes to the code?

A. Add an X-API-Key header in the HTTP header for authorization.

B. Use an interface endpoint.

C. Use a gateway endpoint.

D. Add an Amazon Simple Queue Service (Amazon SQS) queue between the two REST APIs.

Correct Answer: B

An interface endpoint is a horizontally scaled, redundant VPC endpoint that provides private connectivity to a service. It is an elastic network interface with a private IP address that serves as an entry point for traffic destined for the AWS service. Interface endpoints are used to connect VPCs with AWS services.

Question 6:

An online video game company must maintain ultra-low latency for its game servers. The game servers run on Amazon EC2 instances. The company needs a solution that can handle millions of UDP internet traffic requests each second.

Which solution will meet these requirements MOST cost-effectively?

A. Configure an Application Load Balancer with the required protocol and ports for the internet traffic. Specify the EC2 instances as the targets.

B. Configure a Gateway Load Balancer for the internet traffic. Specify the EC2 instances as the targets.

C. Configure a Network Load Balancer with the required protocol and ports for the internet traffic. Specify the EC2 instances as the targets.

D. Launch an identical set of game servers on EC2 instances in separate AWS Regions. Route internet traffic to both sets of EC2 instances.

Correct Answer: C
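One way to see why a Network Load Balancer fits is to compare listener protocols. The sketch below is a simple lookup table, not an AWS API call. A Gateway Load Balancer is left out because it is intended for steering traffic through inline virtual appliances rather than fronting application servers.

```python
# Listener protocols accepted by each load balancer type.
LISTENER_PROTOCOLS = {
    "Application Load Balancer": {"HTTP", "HTTPS"},
    "Network Load Balancer": {"TCP", "UDP", "TCP_UDP", "TLS"},
}

def balancers_supporting(protocol):
    """Return the load balancer types whose listeners accept the protocol."""
    return sorted(
        name
        for name, protocols in LISTENER_PROTOCOLS.items()
        if protocol in protocols
    )

print(balancers_supporting("UDP"))    # ['Network Load Balancer']
print(balancers_supporting("HTTPS"))  # ['Application Load Balancer']
```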

Question 7:

A company uses a legacy application to produce data in CSV format. The legacy application stores the output data in Amazon S3. The company is deploying a new commercial off-the-shelf (COTS) application that can perform complex SQL queries to analyze data that is stored in Amazon Redshift and Amazon S3 only. However, the COTS application cannot process the .csv files that the legacy application produces.

The company cannot update the legacy application to produce data in another format. The company needs to implement a solution so that the COTS application can use the data that the legacy application produces.

Which solution will meet these requirements with the LEAST operational overhead?

A. Create an AWS Glue extract, transform, and load (ETL) job that runs on a schedule. Configure the ETL job to process the .csv files and store the processed data in Amazon Redshift.

B. Develop a Python script that runs on Amazon EC2 instances to convert the .csv files to .sql files. Invoke the Python script on a cron schedule to store the output files in Amazon S3.

C. Create an AWS Lambda function and an Amazon DynamoDB table. Use an S3 event to invoke the Lambda function. Configure the Lambda function to perform an extract, transform, and load (ETL) job to process the .csv files and store the processed data in the DynamoDB table.

D. Use Amazon EventBridge (Amazon CloudWatch Events) to launch an Amazon EMR cluster on a weekly schedule. Configure the EMR cluster to perform an extract, transform, and load (ETL) job to process the .csv files and store the processed data in an Amazon Redshift table.

Correct Answer: A

A would be the best solution as it involves the least operational overhead. With this solution, an AWS Glue ETL job is created to process the .csv files and store the processed data directly in Amazon Redshift.

This is a serverless approach that does not require any infrastructure to be provisioned, configured, or maintained.

AWS Glue provides a fully managed, pay-as-you-go ETL service that can be easily configured to process data from S3 and load it into Amazon Redshift.

This approach allows the legacy application to continue to produce data in the CSV format that it currently uses, while providing the new COTS application with the ability to analyze the data using complex SQL queries.

Question 8:

A company's reporting system delivers hundreds of .csv files to an Amazon S3 bucket each day. The company must convert these files to Apache Parquet format and must store the files in a transformed data bucket.

Which solution will meet these requirements with the LEAST development effort?

A. Create an Amazon EMR cluster with Apache Spark installed. Write a Spark application to transform the data. Use EMR File System (EMRFS) to write files to the transformed data bucket.

B. Create an AWS Glue crawler to discover the data. Create an AWS Glue extract, transform, and load (ETL) job to transform the data. Specify the transformed data bucket in the output step.

C. Use AWS Batch to create a job definition with Bash syntax to transform the data and output the data to the transformed data bucket. Use the job definition to submit a job. Specify an array job as the job type.

D. Create an AWS Lambda function to transform the data and output the data to the transformed data bucket. Configure an event notification for the S3 bucket. Specify the Lambda function as the destination for the event notification.

Correct Answer: B

Question 9:

A company uses Amazon EC2 instances and Amazon Elastic Block Store (Amazon EBS) to run its self-managed database. The company has 350 TB of data spread across all EBS volumes. The company takes daily EBS snapshots and keeps the snapshots for 1 month. The daily change rate is 5% of the EBS volumes.

Because of new regulations, the company needs to keep the monthly snapshots for 7 years. The company needs to change its backup strategy to comply with the new regulations and to ensure that data is available with minimal administrative effort.

Which solution will meet these requirements MOST cost-effectively?

A. Keep the daily snapshot in the EBS snapshot standard tier for 1 month. Copy the monthly snapshot to Amazon S3 Glacier Deep Archive with a 7-year retention period.

B. Continue with the current EBS snapshot policy. Add a new policy to move the monthly snapshot to Amazon EBS Snapshots Archive with a 7-year retention period.

C. Keep the daily snapshot in the EBS snapshot standard tier for 1 month. Keep the monthly snapshot in the standard tier for 7 years. Use incremental snapshots.

D. Keep the daily snapshot in the EBS snapshot standard tier. Use EBS direct APIs to take snapshots of all the EBS volumes every month. Store the snapshots in an Amazon S3 bucket in the Infrequent Access tier for 7 years.

Correct Answer: B
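A rough cost comparison helps explain answer B. The sketch below is a back-of-the-envelope estimate under loudly stated assumptions: the two prices are illustrative placeholders rather than quoted AWS rates, the standard tier is assumed to store only the blocks changed since the previous monthly snapshot, the archive tier is assumed to store a full copy per snapshot, and the first full snapshot is ignored.

```python
VOLUME_TB = 350            # from the question
DAILY_CHANGE_RATE = 0.05   # from the question
RETENTION_MONTHS = 7 * 12  # monthly snapshots kept for 7 years

# Assumed illustrative prices in USD per GB-month; check current AWS pricing.
STANDARD_PRICE = 0.05
ARCHIVE_PRICE = 0.0125

def monthly_changed_tb(volume_tb, daily_rate, days=30):
    """Upper bound on data changed in a month, capped at the full volume size."""
    return min(volume_tb, volume_tb * daily_rate * days)

def steady_state_monthly_cost(stored_tb_per_snapshot, price_per_gb_month):
    """Monthly bill once all retained monthly snapshots exist."""
    stored_gb = stored_tb_per_snapshot * 1000 * RETENTION_MONTHS
    return stored_gb * price_per_gb_month

changed = monthly_changed_tb(VOLUME_TB, DAILY_CHANGE_RATE)
standard = steady_state_monthly_cost(changed, STANDARD_PRICE)
archive = steady_state_monthly_cost(VOLUME_TB, ARCHIVE_PRICE)
print(f"changed per month: {changed} TB")
print(f"standard tier: ${standard:,.0f}, archive tier: ${archive:,.0f}")
```

With a 5% daily change rate, a month of changes can touch the whole volume, so incremental storage in the standard tier saves little here and the lower archive price dominates.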

Question 10:

A company has an organization in AWS Organizations that has all features enabled. The company requires that all API calls and logins in any existing or new AWS account must be audited. The company needs a managed solution to prevent additional work and to minimize costs.

The company also needs to know when any AWS account is not compliant with the AWS Foundational Security Best Practices (FSBP) standard.

Which solution will meet these requirements with the LEAST operational overhead?

A. Deploy an AWS Control Tower environment in the Organizations management account. Enable AWS Security Hub and AWS Control Tower Account Factory in the environment.

B. Deploy an AWS Control Tower environment in a dedicated Organizations member account. Enable AWS Security Hub and AWS Control Tower Account Factory in the environment.

C. Use AWS Managed Services (AMS) Accelerate to build a multi-account landing zone (MALZ). Submit an RFC to self-service provision Amazon GuardDuty in the MALZ.

D. Use AWS Managed Services (AMS) Accelerate to build a multi-account landing zone (MALZ). Submit an RFC to self-service provision AWS Security Hub in the MALZ.

Correct Answer: A

Question 11:

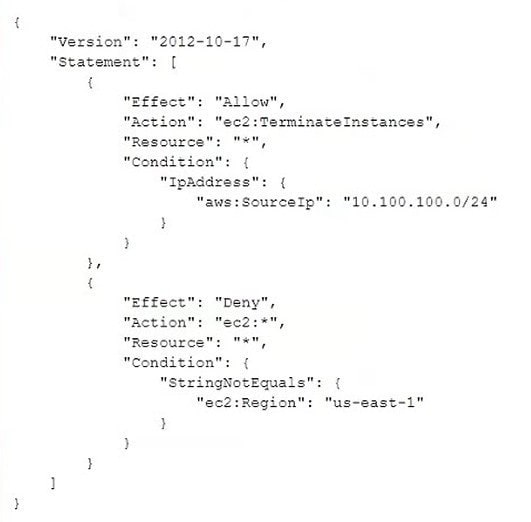

An Amazon EC2 administrator created the following policy associated with an IAM group containing several users. What is the effect of this policy?

A. Users can terminate an EC2 instance in any AWS Region except us-east-1.

B. Users can terminate an EC2 instance with the IP address 10.100.100.1 in the us-east-1 Region.

C. Users can terminate an EC2 instance in the us-east-1 Region when the user's source IP is 10.100.100.254.

D. Users cannot terminate an EC2 instance in the us-east-1 Region when the user's source IP is 10.100.100.254.

Correct Answer: C

The policy denies every EC2 action in any Region other than us-east-1 and allows only users whose source IP is in 10.100.100.0/24 to terminate instances. A user with source IP 10.100.100.254 can therefore terminate instances in the us-east-1 Region.
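The evaluation described in this explanation can be sketched in Python. This models only what the explanation states (a deny outside us-east-1 and an allow for the 10.100.100.0/24 source range); it is an assumption about the policy in the image, not a copy of it, and `can_terminate` is a hypothetical helper.

```python
import ipaddress

ALLOWED_REGION = "us-east-1"
ALLOWED_SOURCE_RANGE = ipaddress.ip_network("10.100.100.0/24")

def can_terminate(region, source_ip):
    """Mimic the described policy: deny outside us-east-1, allow from the CIDR."""
    if region != ALLOWED_REGION:
        return False  # an explicit deny wins over any allow
    return ipaddress.ip_address(source_ip) in ALLOWED_SOURCE_RANGE

print(can_terminate("us-east-1", "10.100.100.254"))  # True
print(can_terminate("us-west-2", "10.100.100.254"))  # False
print(can_terminate("us-east-1", "10.100.101.1"))    # False
```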

Question 12:

A company is building a web-based application running on Amazon EC2 instances in multiple Availability Zones.

The web application will provide access to a repository of text documents totaling about 900 TB in size. The company anticipates that the web application will experience periods of high demand.

A solutions architect must ensure that the storage component for the text documents can scale to meet the demand of the application at all times. The company is concerned about the overall cost of the solution.

Which storage solution meets these requirements MOST cost-effectively?

A. Amazon Elastic Block Store (Amazon EBS)

B. Amazon Elastic File System (Amazon EFS)

C. Amazon Elasticsearch Service (Amazon ES)

D. Amazon S3

Correct Answer: D

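Amazon S3 is the most cost-effective option here: it scales automatically to hold the 900 TB repository and absorb periods of high demand, and the EC2 instances in every Availability Zone can access it.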

Question 13:

A company wants to use the AWS Cloud to improve its on-premises disaster recovery (DR) configuration. The company's core production business application uses Microsoft SQL Server Standard, which runs on a virtual machine (VM).

The application has a recovery point objective (RPO) of 30 seconds or fewer and a recovery time objective (RTO) of 60 minutes. The DR solution needs to minimize costs wherever possible.

Which solution will meet these requirements?

A. Configure a multi-site active/active setup between the on-premises server and AWS by using Microsoft SQL Server Enterprise with Always On availability groups.

B. Configure a warm standby Amazon RDS for SQL Server database on AWS. Configure AWS Database Migration Service (AWS DMS) to use change data capture (CDC).

C. Use AWS Elastic Disaster Recovery configured to replicate disk changes to AWS as a pilot light.

D. Use third-party backup software to capture backups every night. Store a secondary set of backups in Amazon S3.

Correct Answer: C

Question 14:

To meet security requirements, a company needs to encrypt all of its application data in transit while communicating with an Amazon RDS MySQL DB instance. A recent security audit revealed that encryption at rest is enabled using AWS Key Management Service (AWS KMS), but encryption in transit is not enabled.

What should a solutions architect do to satisfy the security requirements?

A. Enable IAM database authentication on the database.

B. Provide self-signed certificates. Use the certificates in all connections to the RDS instance.

C. Take a snapshot of the RDS instance. Restore the snapshot to a new instance with encryption enabled.

D. Download AWS-provided root certificates. Provide the certificates in all connections to the RDS instance.

Correct Answer: D

Question 15:

A company is migrating an application from an on-premises location to Amazon Elastic Kubernetes Service (Amazon EKS). The company must use a custom subnet for pods that are in the company's VPC to comply with requirements. The company also needs to ensure that the pods can communicate securely within the pods' VPC.

Which solution will meet these requirements?

A. Configure AWS Transit Gateway to directly manage custom subnet configurations for the pods in Amazon EKS.

B. Create an AWS Direct Connect connection from the company's on-premises IP address ranges to the EKS pods.

C. Use the Amazon VPC CNI plugin for Kubernetes. Define custom subnets in the VPC cluster for the pods to use.

D. Implement a Kubernetes network policy that has pod anti-affinity rules to restrict pod placement to specific nodes that are within custom subnets.

Correct Answer: C

…

You should now have completed all the free exercises. These practice questions are just a warm-up. You're welcome to use the newly updated SAA-C03 dumps: https://www.leads4pass.com/saa-c03.html. Develop a regular practice plan and work through all the exam questions and answers thoroughly to give yourself the best chance of passing the exam. If you still have questions, you're welcome to visit the FAQs.